Quantformer

How a Transformer-Based Model Is Quietly Redefining Quant Trading

Linear factor models once dominated portfolio construction. Machine-learning models such as SVMs and LSTMs tried to push the frontier but struggled with noisy, chaotic market data. Then transformers changed the world of language, and a natural question followed: could the same architecture that understands text also learn the patterns hidden in financial time series?

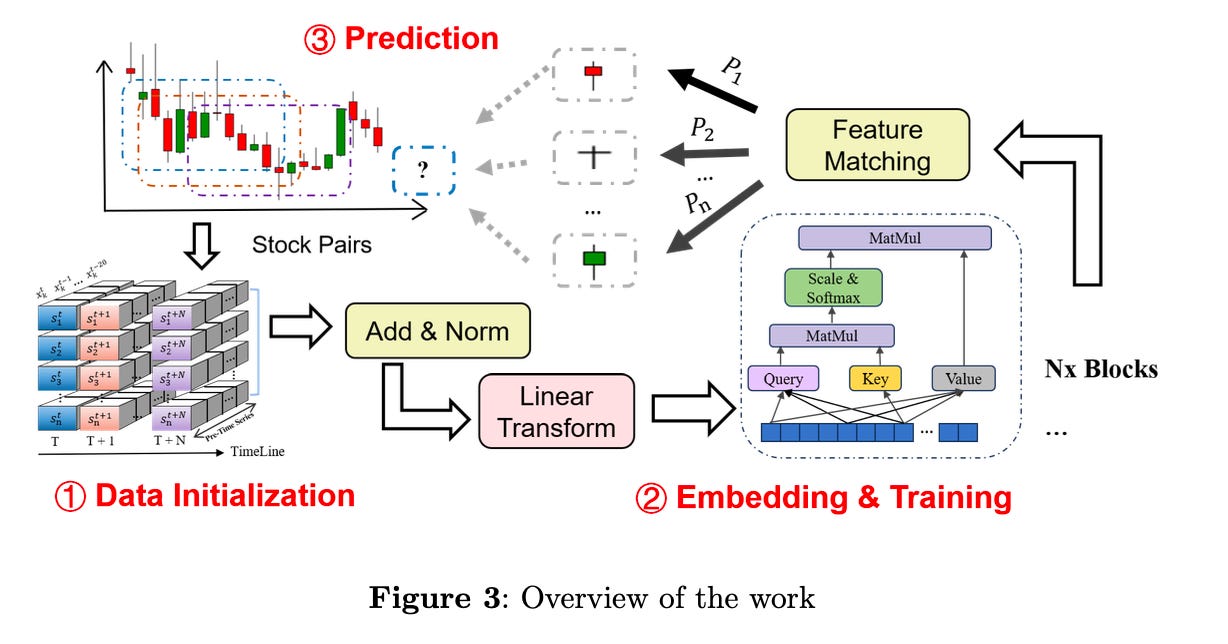

The study “Quantformer: From Attention to Profit with a Quantitative Transformer Trading Strategy” sets out to answer this question. The authors introduce a transformer-like architecture designed not for text but for stock-market prediction, and they back it with one of the largest datasets ever compiled for the Chinese market: more than 5 million samples across 4,601 stocks from 2010 to 2023.

Their conclusion: a properly modified transformer not only works for stock prediction but significantly outperforms 100 traditional price-volume factors, delivering higher returns and better risk-adjusted performance.

Why Transformers Need a Makeover for Markets

Transformers were born for language. They excel at modeling contextual relationships across long stretches of text. But price data is not text, and a financial time series is not a sequence of discrete tokens. Three problems arise immediately:

Transformers rely on word embeddings, lookup tables that map discrete tokens to vectors.

Market inputs are numeric or categorical, such as returns, turnover rates, and sectors, rather than words.

Transformers are built for sequence-to-sequence tasks.

A translation model outputs a sentence. A trader needs a single prediction, for example the probability that a stock will outperform next month.

Decoders in transformers depend on autoregressive masking.

This is unnecessary and even harmful when forecasting future returns.
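A small numpy sketch makes the masking point concrete. It uses toy random attention scores over a five-step lookback window; nothing here comes from the paper. With a causal mask, step t may attend only to steps up to t, which is what a model generating text word by word needs. In a return forecaster, one way to see the problem is that every step in the input window has already been observed: the future the model must not see lies outside the window entirely, so the mask only discards usable history.

```python
import numpy as np

def attention_weights(scores: np.ndarray, causal: bool) -> np.ndarray:
    """Row-wise softmax over attention scores, optionally with a causal mask."""
    scores = scores.copy()
    if causal:
        # Block attention from step t to any later step (strict upper triangle).
        t = scores.shape[0]
        mask = np.triu(np.ones((t, t), dtype=bool), k=1)
        scores[mask] = -np.inf
    scores -= scores.max(axis=1, keepdims=True)  # numerical stability
    w = np.exp(scores)
    return w / w.sum(axis=1, keepdims=True)

rng = np.random.default_rng(0)
scores = rng.normal(size=(5, 5))  # toy scores for 5 already-observed time steps

causal = attention_weights(scores, causal=True)
full = attention_weights(scores, causal=False)

# Under the causal mask, the first step can only look at itself.
print(causal[0].round(3))  # [1. 0. 0. 0. 0.]
# Without the mask, it can draw on the whole observed window.
print(full[0].round(3))
```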

Quantformer’s innovation is to modify the transformer architecture directly:

Replace word embeddings with linear layers tailored to numerical inputs.

Remove the decoder’s autoregressive mechanism entirely.

Keep multi-head attention to capture long-range temporal dependencies in price data.

Output probability distributions across quantiles of future stock performance.
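The four changes can be pictured with a minimal numpy forward pass. This is a sketch under stated assumptions, not the authors' implementation: all sizes are hypothetical, the weights are random rather than trained, and it uses a single unmasked attention block with mean pooling over time, omitting layer normalization, feed-forward sublayers, and whatever encoder/decoder layout the paper actually uses.

```python
import numpy as np

rng = np.random.default_rng(42)

# Hypothetical sizes: the article does not give Quantformer's hyperparameters.
SEQ_LEN, N_FEATURES = 20, 2  # e.g. 20 periods of (return, turnover rate)
D_MODEL, N_HEADS, N_QUANTILES = 16, 4, 5
D_HEAD = D_MODEL // N_HEADS

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

# Random weights stand in for trained parameters.
W_embed = rng.normal(scale=0.1, size=(N_FEATURES, D_MODEL))  # replaces word embeddings
W_q, W_k, W_v, W_o = (rng.normal(scale=0.1, size=(D_MODEL, D_MODEL)) for _ in range(4))
W_out = rng.normal(scale=0.1, size=(D_MODEL, N_QUANTILES))

def multi_head_attention(h):
    """Unmasked self-attention: every step may attend to every other step."""
    t = h.shape[0]
    # Project, then split D_MODEL into N_HEADS heads of size D_HEAD.
    q = (h @ W_q).reshape(t, N_HEADS, D_HEAD).transpose(1, 0, 2)
    k = (h @ W_k).reshape(t, N_HEADS, D_HEAD).transpose(1, 0, 2)
    v = (h @ W_v).reshape(t, N_HEADS, D_HEAD).transpose(1, 0, 2)
    scores = q @ k.transpose(0, 2, 1) / np.sqrt(D_HEAD)  # (heads, t, t), no causal mask
    out = softmax(scores) @ v                             # (heads, t, D_HEAD)
    return out.transpose(1, 0, 2).reshape(t, D_MODEL) @ W_o

def quantile_probabilities(x):
    """Map one stock's (SEQ_LEN, N_FEATURES) window to probabilities over quantiles."""
    h = x @ W_embed                  # linear layer instead of a token lookup
    h = h + multi_head_attention(h)  # residual connection
    pooled = h.mean(axis=0)          # collapse the sequence to one vector
    return softmax(pooled @ W_out)   # a single prediction, not a sequence

window = rng.normal(size=(SEQ_LEN, N_FEATURES))
probs = quantile_probabilities(window)
print(probs.shape, probs.sum())
```

Mean pooling is just one way to collapse the sequence into a single vector; using the last time step's representation is another common choice.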

The goal is to turn a transformer into an investment-factor generator.
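One simple way to turn quantile probabilities into a factor is to score each stock by its expected quantile and rank the cross-section. The article does not say which mapping the paper uses. The expected-quantile score, the top-k equal-weight rule, and the toy probabilities below are illustrative assumptions; the probability of landing in the top quantile would be an equally plausible score.

```python
import numpy as np

def factor_scores(probs: np.ndarray) -> np.ndarray:
    """Collapse each stock's quantile distribution into one factor value.

    probs has shape (n_stocks, n_quantiles); column 0 is the worst quantile
    and the last column the best. The score is the expected quantile index.
    """
    quantile_idx = np.arange(probs.shape[1])
    return probs @ quantile_idx

def top_k_portfolio(tickers, scores, k):
    """Equal-weight long portfolio of the k highest-scoring stocks."""
    order = np.argsort(scores)[::-1][:k]
    return {tickers[i]: 1.0 / k for i in order}

# Toy model outputs for four hypothetical stocks over 3 quantiles.
tickers = ["A", "B", "C", "D"]
probs = np.array([
    [0.6, 0.3, 0.1],
    [0.1, 0.3, 0.6],
    [0.2, 0.5, 0.3],
    [0.4, 0.3, 0.3],
])
scores = factor_scores(probs)
print(scores)
print(top_k_portfolio(tickers, scores, k=2))  # {'B': 0.5, 'C': 0.5}
```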

The Data: 4,601 Stocks, 14 Years, 5 Million Rolling Samples
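The heading suggests that samples are produced by rolling a fixed lookback window through each stock's history. A minimal sketch of that construction on a random toy panel follows. The window length, one-period horizon, features, and five-quantile labelling are hypothetical choices, not the paper's settings. Labels are cross-sectional: in each period, stocks are ranked by return and split into equal-sized buckets, matching the quantile outputs described above.

```python
import numpy as np

def rolling_samples(features, returns, window, n_quantiles):
    """Build (input window, quantile label) pairs for a panel of stocks.

    features: (n_periods, n_stocks, n_features) array of observed inputs.
    returns:  (n_periods, n_stocks) array of per-period returns.
    For each period t >= window, the input is features[t-window:t] for one stock
    and the label is the cross-sectional quantile of that stock's return at t.
    """
    n_periods, n_stocks, _ = features.shape
    X, y = [], []
    for t in range(window, n_periods):
        # Rank stocks by their return in period t (right after the window).
        ranks = np.argsort(np.argsort(returns[t]))  # 0 = worst
        labels = ranks * n_quantiles // n_stocks    # 0 .. n_quantiles-1
        for s in range(n_stocks):
            X.append(features[t - window:t, s, :])
            y.append(labels[s])
    return np.stack(X), np.array(y)

rng = np.random.default_rng(0)
n_periods, n_stocks, n_features = 30, 10, 2  # toy panel, not the paper's data
features = rng.normal(size=(n_periods, n_stocks, n_features))
returns = rng.normal(scale=0.05, size=(n_periods, n_stocks))

X, y = rolling_samples(features, returns, window=20, n_quantiles=5)
print(X.shape, y.shape)  # (100, 20, 2) (100,)
```

Because the slice `features[t - window:t]` stops before period t, the input never includes the period whose return defines the label.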


Subscribe to LLMQuant Newsletter to keep reading this post and get 7 days of free access to the full post archives.